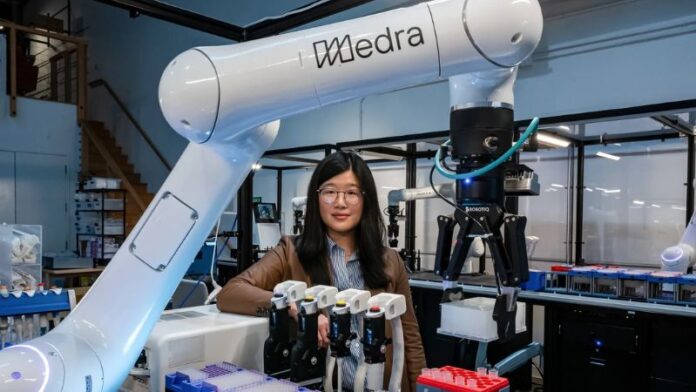

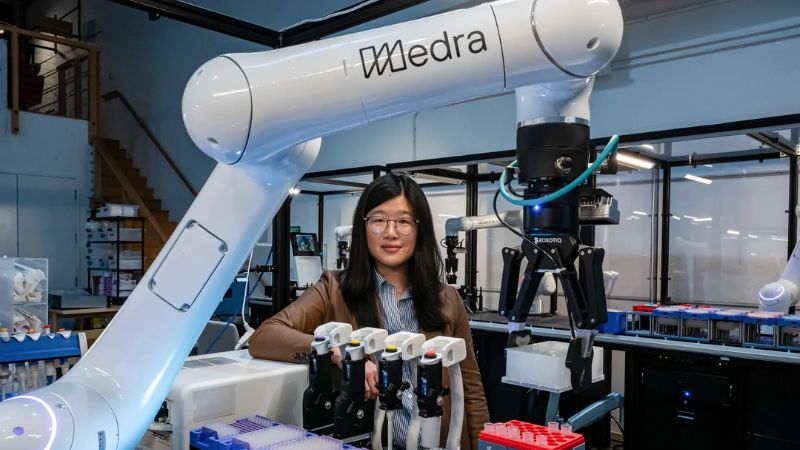

Medra, which programs robots with artificial intelligence to conduct and improve biological experiments, has raised $52 million to build what it says will be one of the largest autonomous labs in the United States.

The deal brings Medra’s total funding to $63 million, including pre-seed and seed financing. Existing investor Human Capital led the new round, which came together just weeks after the company started talking publicly about its work in September, Chief Executive Officer Michelle Lee said in an interview at the company’s San Francisco lab. The company recently signed an agreement to work on early drug discovery with Genentech, a subsidiary of pharmaceutical giant Roche Holding AG.

Lee, who earned a doctorate in robotics from Stanford University and had planned to join New York University’s faculty, left academia in 2022 to start Medra. Her bet is that “physical AI” — robots with sensors and cameras tied to software that allows them to process what they’re doing, log precise data and adapt — will dramatically accelerate scientific progress.

“There’s been industrial automation in life sciences for decades,” she said, referring to robots that repeat preprogrammed motions. “But no one has been working on physical AI. And if we can succeed, it’ll completely transform how drug discovery and life-science research is done.”

Medra is growing out of a warehouse space in San Francisco’s Mission district, where a series of steel tables house robotic arms that pinch, spin, drip and mix to manipulate cells and lab chemicals. The company doesn’t make the hardware, sensors or cameras; Medra has developed software that is intended to allow a scientist to direct the robot using natural language, the way they would with a chatbot, and brainstorm ways to solve problems and adjust lab work.

The robots are programmed to operate independently once they are given a task, keeping detailed notes of their actions. The software tracks every detail of an experiment: the angle of a pipette, how deep it dips into a well, the time between adding reagents, the speed of mixing. That data is fed into what Lee calls a vision-language-lab-action model, which lets the system reason about what happened in an experiment and propose changes.
